Synthetic Data is Here

What do you think of when you hear the word ‘data’? Most of the time, we think of data as numbers and figures collected from real-life sources, but there is another player in the game: synthetic data.

This past week, “This Week in Innovation” podcast host Jeff Roster sat down with Brian Sathianathan, Solomon Ray, and Shomron Jacob to explore the topic of synthetic data. They covered how retailers and other companies outside the technology space could use synthetic data to gain more insight into their customer base, the challenges they would face in generating and using it, and its future implications for enterprises around the world.

Data is the oil that runs the machine, Sathianathan says, and you want the highest quality you can get. Data is a critical component of an organization’s success because it lets the organization see relationships between what is happening in different locations, departments, and systems.

What is synthetic data?

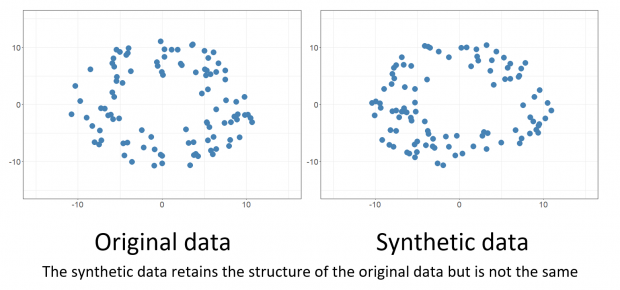

Synthetic data is data that is artificially created rather than generated by actual events. One method is to identify the distributions of, and correlations between, variables in a real data set, then draw random observations that share those statistical properties. More advanced approaches use generative adversarial networks (GANs) to generate synthetic data in the form of images and videos. In short, synthetic data is not collected from real sources; it is created. Even so, well-made synthetic data looks natural in a real environment, because it mirrors a set of actual data as closely as possible. For example, a synthetic data set of a university student population would have roughly the same number of “students” with the same mix of backgrounds, but would not contain any real student’s data.
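The correlation-based method can be sketched in a few lines. This is a minimal illustration, not the method used by any particular vendor: it assumes the columns are numeric and roughly normally distributed, fits a multivariate normal distribution (means plus covariance), and samples new rows from it. The toy "student" table (age, weekly study hours) and all function names are hypothetical; production tools typically use more flexible models such as copulas or GANs.

```python
import math
import random
import statistics
from typing import List

Table = List[List[float]]


def mean_vector(rows: Table) -> List[float]:
    """Column-wise means of a table of numeric rows."""
    return [statistics.fmean(col) for col in zip(*rows)]


def covariance_matrix(rows: Table, means: List[float]) -> Table:
    """Sample covariance matrix (n - 1 denominator)."""
    n, d = len(rows), len(means)
    cov = [[0.0] * d for _ in range(d)]
    for row in rows:
        centered = [x - m for x, m in zip(row, means)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += centered[i] * centered[j]
    return [[c / (n - 1) for c in r] for r in cov]


def cholesky(a: Table) -> Table:
    """Lower-triangular L with L * L^T == a (a must be positive definite)."""
    d = len(a)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def synthesize(rows: Table, n_samples: int, seed: int = 0) -> Table:
    """Draw synthetic rows with the same means and covariance as `rows`."""
    rng = random.Random(seed)
    means = mean_vector(rows)
    L = cholesky(covariance_matrix(rows, means))
    d = len(means)
    out = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # independent standard normals
        # x = mean + L z has covariance L L^T, i.e. the real data's covariance
        out.append([means[i] + sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(d)])
    return out


# Hypothetical "real" records: [age, weekly study hours]
real_students = [
    [18.0, 10.0], [19.0, 12.0], [20.0, 15.0], [21.0, 14.0],
    [22.0, 18.0], [23.0, 20.0], [24.0, 19.0], [25.0, 23.0],
]
synthetic = synthesize(real_students, n_samples=5000, seed=1)
print("real means:", mean_vector(real_students))
print("synthetic means:", mean_vector(synthetic))
```

None of the synthetic rows is a copy of a real record, yet the averages and the positive age/study-hours relationship carry over. A plain Gaussian fit cannot capture skewed or categorical columns, which is one reason GAN-based generators exist.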

With synthetic data, it is no longer always necessary to go through the painstaking process of collecting specific data from each and every possible sample. Synthetic data can streamline the process while preserving the essence of real data.

The role of synthetic data

Synthetic data helps combat a lack of data by supplementing existing datasets with accurate, artificially generated records. In other words, synthetic data fills in the gaps while allowing organizations to keep their existing security controls in place.

The following are three main purposes of synthetic data:

- Privacy – Many online organizations and retailers limit the amount of customer data they collect and use for legal, security, and privacy reasons. To offset this shortage, retailers can use synthetic data to augment customer data, preserving its accuracy without over-masking it the way traditional data anonymization methods do.

- Product Testing – Testing a product before release requires data, but such data often either does not exist yet or is not available to the testers.

- Training ML Models – Machine learning algorithms need training data. However, that data can be expensive to generate in real life, especially in the case of self-driving cars.

Real-world usage

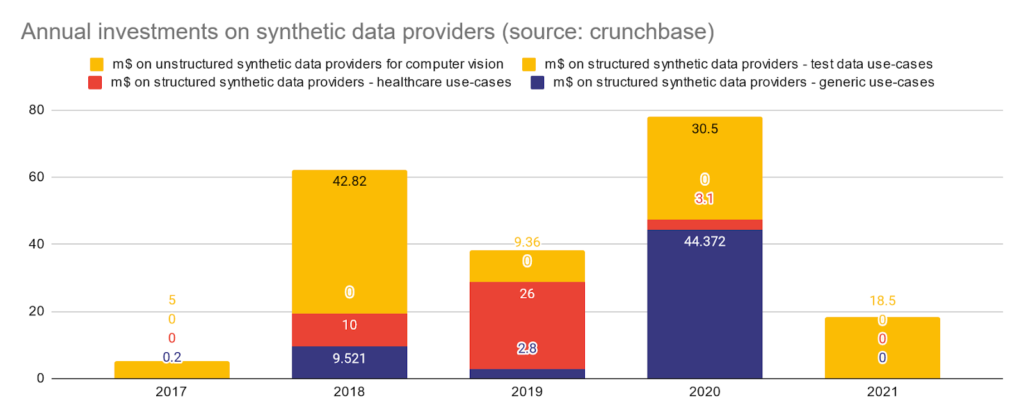

Data is extremely valuable, and companies need it to make informed business decisions. Currently, $78 million is being invested in this market space, which includes data providers that supply data to AI platforms, AI platform markets (such as the data systems used to generate data), and AI training-data markets.

With all these markets, who has the power? Retailers get a ton of leverage out of synthetic data. They can work with data platform enablers and customer data platforms, AI platform providers (such as Interplay), or providers of big data platforms and data management. A typical vendor could be anything from a startup to a large, fully established company. By working with these organizations, retailers and brands can own their own models, data, AND synthetic data.

Synthetic data can help level the playing field between competitors. Startups and other new entrants may struggle to catch up to established organizations simply because they have less data. Conversely, incumbents may lack the volume of fresh data that newer, digitally native organizations collect. On both sides of the coin, synthetic data lets companies create the data they need.

Synthetic data is put to use across all kinds of industries. Let’s take a look at the beauty, insurance, and healthcare industries.

- In the beauty industry, it’s critical to match products to a variety of skin and hair tones. To build algorithms that do so, engineers need data covering all of these shades. Such data can be hard to get, especially when a company’s existing customer base has an inherent bias (e.g. a company based in China might have data in which 95% of customers have black hair). With GAN-based synthetic data methods, a wide range of skin tones, eye shapes, and facial features can be manufactured to fill in any “gaps” left by a biased training data set.

- The insurance industry uses data to model risk, identify new markets, and reduce fraud. Gaps in insurance data about specific demographic groups (e.g. reported income level and gender) can be corrected to more closely match real-world demographics by using synthetic data alongside related techniques for imbalanced data, such as modifying class weights, penalizing the models, anomaly detection, oversampling, and undersampling.
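Three of the rebalancing techniques named in the insurance example (class weights, oversampling, and undersampling) can be sketched with the Python standard library. This is a minimal illustration under stated assumptions: each record is a hypothetical `(group label, reported income)` pair, and the group labels and helper names are invented for the example. Random oversampling only duplicates existing rows; methods that create genuinely new synthetic rows, such as SMOTE (which interpolates between neighboring minority-class records), apply the same rebalancing idea.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

Record = Tuple[str, float]  # hypothetical layout: (demographic group, reported income)


def class_weights(labels: List[str]) -> Dict[str, float]:
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}


def group_by_label(records: List[Record]) -> Dict[str, List[Record]]:
    groups: Dict[str, List[Record]] = {}
    for rec in records:
        groups.setdefault(rec[0], []).append(rec)
    return groups


def random_oversample(records: List[Record], seed: int = 0) -> List[Record]:
    """Duplicate random minority-group rows until every group matches the largest."""
    rng = random.Random(seed)
    groups = group_by_label(records)
    target = max(len(rows) for rows in groups.values())
    balanced: List[Record] = []
    for rows in groups.values():
        balanced.extend(rows)
        balanced.extend(rng.choice(rows) for _ in range(target - len(rows)))
    return balanced


def random_undersample(records: List[Record], seed: int = 0) -> List[Record]:
    """Keep a random subset of each group, sized to match the smallest group."""
    rng = random.Random(seed)
    groups = group_by_label(records)
    target = min(len(rows) for rows in groups.values())
    balanced: List[Record] = []
    for rows in groups.values():
        balanced.extend(rng.sample(rows, target))
    return balanced


# Hypothetical, imbalanced policyholder data: group "A" is 8x, group "B" is 2x
policies: List[Record] = [("A", 40000.0 + 1000 * i) for i in range(8)]
policies += [("B", 90000.0), ("B", 95000.0)]

print(class_weights([group for group, _ in policies]))
print(Counter(group for group, _ in random_oversample(policies, seed=1)))
print(Counter(group for group, _ in random_undersample(policies, seed=1)))
```

Class weights leave the data untouched and instead make errors on the rarer group cost more during training, while over- and undersampling change the data itself; which one fits depends on how much data can be thrown away and how much duplication a model tolerates.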

- The healthcare industry should take note of synthetic data, which can help train AI models to detect abnormalities in medical images. Transfer learning, an ML technique that reuses a model trained on one task as the starting point for a related task, can then apply those detection models in new settings. For example, an AI model trained (with synthetic data) to detect cancer cells in the lungs could be adapted through transfer learning to detect cancer cells in the brain.

The Market Value

Currently, under 5% of Tier 1 retailers are using synthetic data. The main reason is that synthetic data is still in an early experimentation phase, with only around 60 vendors in the space. However, most businesses are brainstorming ways to apply synthetic data to their business problems.

Research suggests that synthetic data not only reduces the time spent gathering and generating data, but can also reduce bias in data. In addition, synthetic data is highly scalable and can be used to build models rapidly. The downside? As of summer 2021, synthetic data is expensive, and incorporating it successfully into business operations still takes a lot of money and resources.

The coming transformation

Today, we find ourselves at the base level of generating synthetic data, a process made easier in a low-code environment such as Interplay. The platform lets businesses upload data files through its drag-and-drop environment and train models using domain transfer techniques. Our team is currently working on methods to remove bias in data. In the coming years, we believe data generation will be transformed as models are developed specifically to generate data. This leads to the most exciting phase yet: relying on models to write the code that generates synthetic data.

Addressing the bumps in the road

As thrilling as it is to imagine what synthetic data could become, there are challenges that require attention.

In the retail industry, working with synthetic data will more than likely require specialized skill sets. Artificial Intelligence and Big Data teams are currently working with vendors to solve data problems, and will use data to help them understand their customer base, forecast sales, analyze traffic, and so on. Models will assess how much data companies have about their customers and extract insights from it.

In addition, synthetic data requires real investment from an organization. It’s not simple to implement. A company will need data-literate personnel, AI engineers, and the data infrastructure to implement synthetic data generation techniques.

Knowledge is Power

Francis Bacon once said, “knowledge is power,” suggesting that knowledge and education increase one’s ability to achieve one’s objectives. Synthetic data is a relatively new concept, and we’re only scratching the surface of its potential. Synthetic data might help us accelerate and enhance our AI, which, to build on Bacon’s aphorism, could become a sort of synthetic knowledge that leads to very real success. As mentioned previously, synthetic data is extremely scalable, but deploying it correctly still requires a fair amount of training. However, the toolsets and platforms are evolving rapidly, and synthetic data should only grow in significance.

If you found this piece about synthetic data insightful, visit our blog page to read up on other topics we have covered, and contact us here if you’re interested in learning about the services we provide!

Our Innovation Blog

Stay ahead of trends with insights from iterate.ai experts and advisors